Today's Mission: Scrape data from a JavaScript-powered table.

In a world full of people using modern solutions such as Selenium, the easiest solution may just be the oldest… using your brain + cURL.

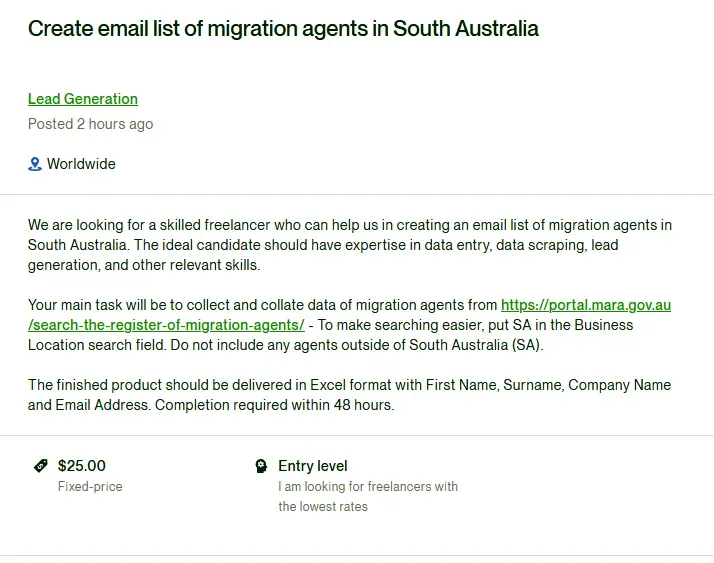

I saw this contract tonight on UpWork.

Title: “Create email list of migration agents in South Australia”

Description: “We are looking for a skilled freelancer who can help us in creating an email list of migration agents in South Australia. The ideal candidate should have expertise in data entry, data scraping, lead generation, and other relevant skills.

Your main task will be to collect and collate data of migration agents from https://portal.mara.gov.au/search-the-register-of-migration-agents/ - To make searching easier, put SA in the Business Location search field. Do not include any agents outside of South Australia (SA).

The finished product should be delivered in Excel format with First Name, Surname, Company Name and Email Address. Completion required within 48 hours.”

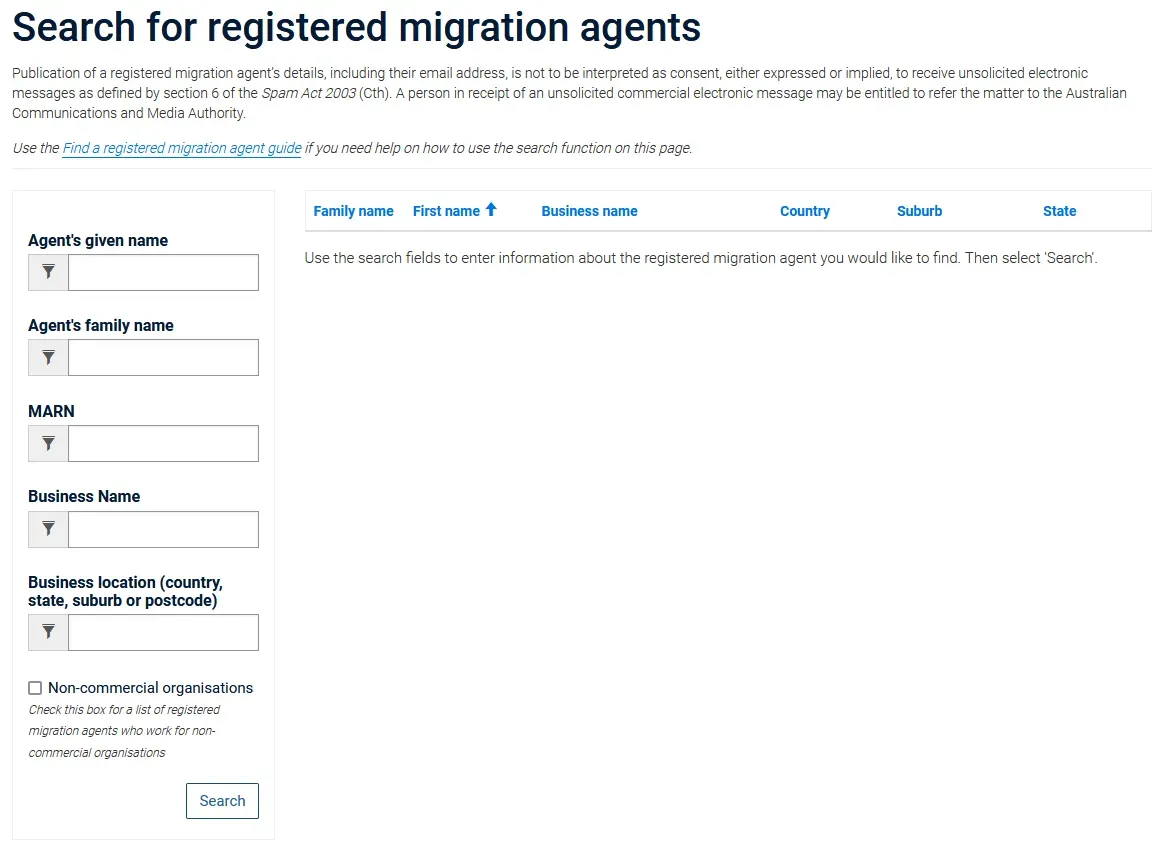

Upon loading the Department of Home Affairs site, we’re greeted with a table and a bunch of filters.

Censored since I’m not doing free advertising

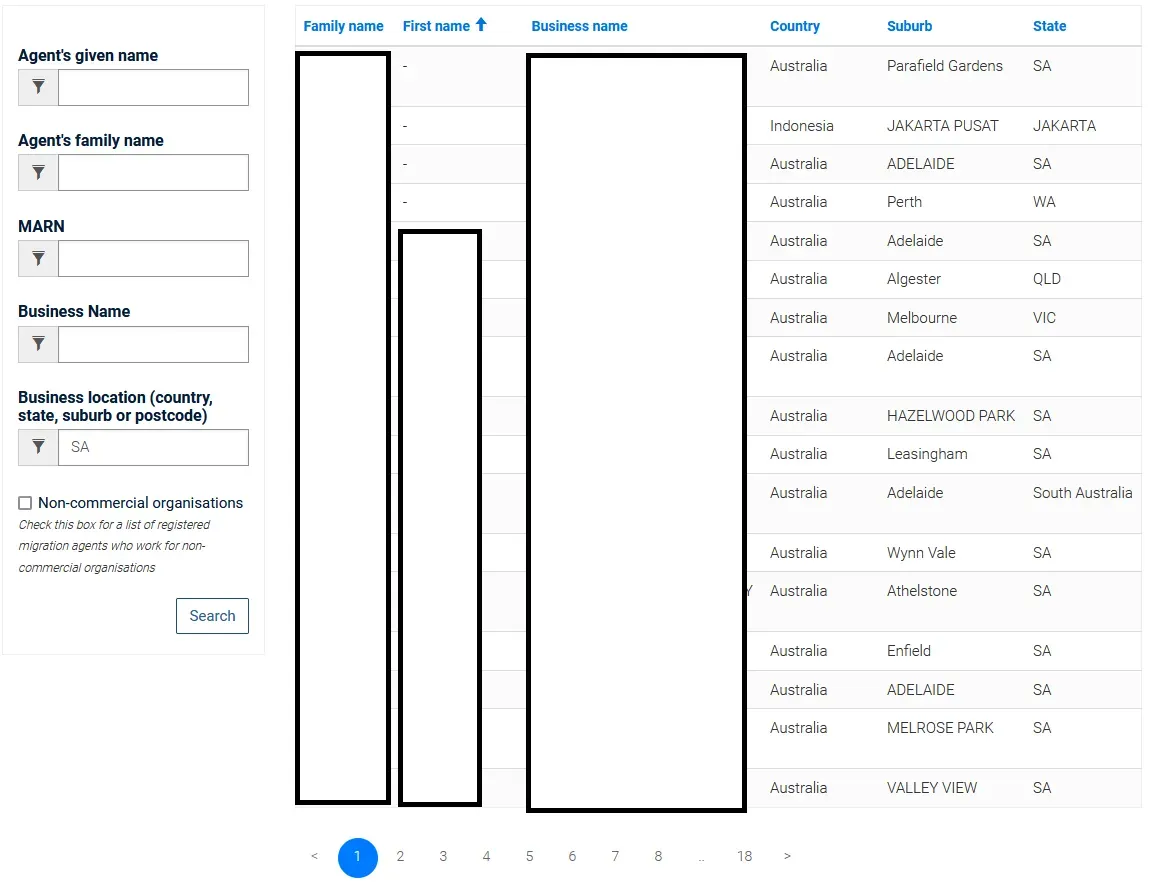

Doing a search with SA in the filter gives us a table with 17 loaded records and 18 pages, so at most 17 × 18 = 306 records in total.
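As a quick sanity check on that estimate, here is the arithmetic as a small Python sketch. It assumes every page except possibly the last is full, which is the usual behaviour for a paginated table but is not something the site confirms.

```python
# Numbers observed in the search results table
rows_per_page = 17
page_count = 18

# If the last page is full, we hit the upper bound
upper_bound = rows_per_page * page_count

# If the last page holds a single row, we hit the lower bound
lower_bound = rows_per_page * (page_count - 1) + 1

print(f"Between {lower_bound} and {upper_bound} records")
```

So the real total sits somewhere between 290 and 306, and any page size of 306 or more should cover the whole result set in one request, assuming the server honours it.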

We can tell the table loads its data dynamically: there is a loading delay when switching pages, and the page numbers themselves are links to JavaScript functions (visible on hover), meaning you can’t tab through the pages.

The other issue is that the contract asks that we grab the Email Address as well, which isn’t plainly displayed. You can only get the email address by hitting each of the surname/family name links in the left-most column.

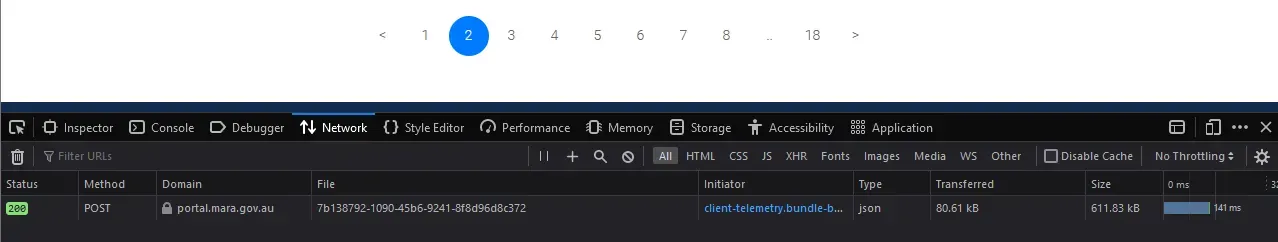

Going into the network tab, we can take a look at what requests are being sent back and forth when hitting buttons on the page.

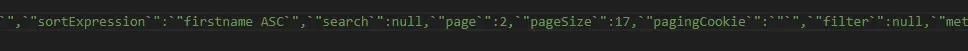

The request itself contains the page we’re loading and the number of rows to load per page (PageSize: 17, which matches the 17 rows from the previous observation. Bingo).

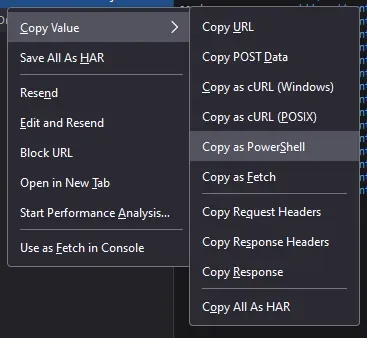

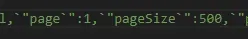

Using Firefox, I’ll copy the request that was sent as a PowerShell command:

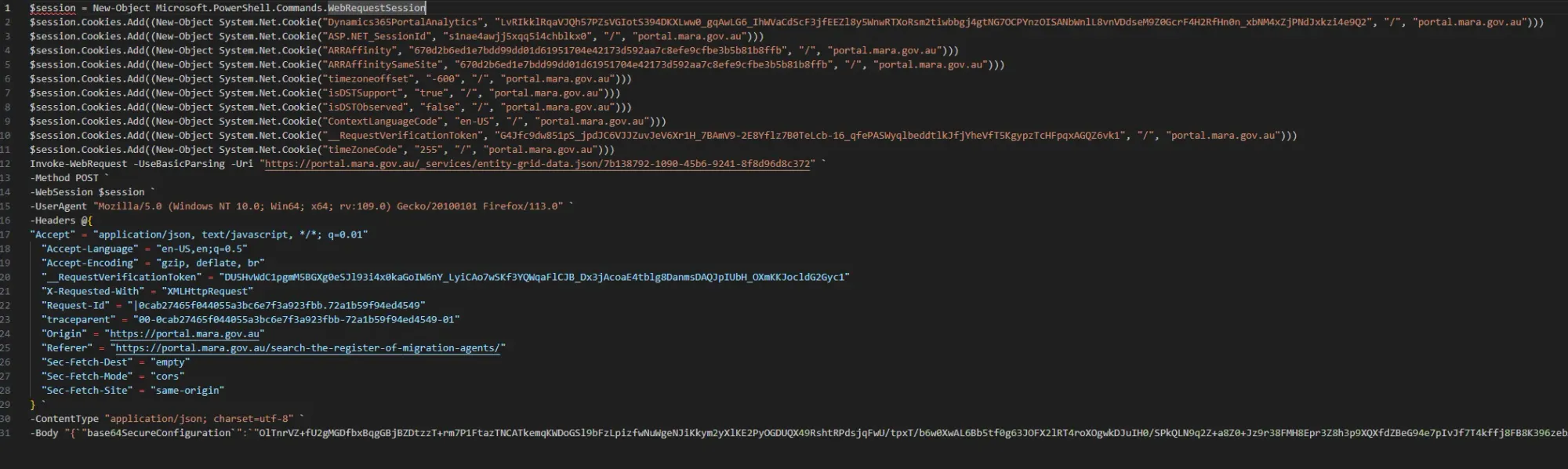

That’s a big ass command

Most of this is the raw data for the command, so let’s skip to the very end, where the variables we want to change live. We set the page to 1 and ask for 500 results.

Ba da ba boom…

Pow!

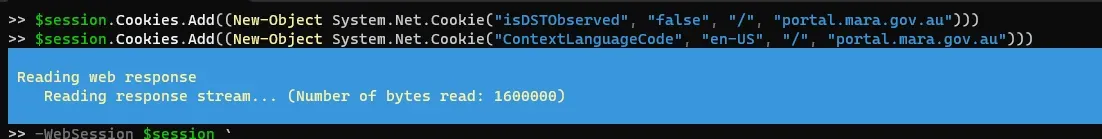
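The same tweak (page 1, 500 results) could also be scripted instead of hand-edited. The sketch below is a rough Python equivalent and makes two assumptions: that the request body is JSON, and that the page field is called `PageNumber`. Only `PageSize` is a name actually seen in the captured request; the `BusinessLocation` key in the stand-in body is made up too.

```python
import json


def with_paging(raw_body: str, page: int, page_size: int) -> str:
    """Return a copy of a JSON request body with new paging values."""
    body = json.loads(raw_body)
    body["PageNumber"] = page     # hypothetical key name, check the real request
    body["PageSize"] = page_size  # key name seen in the captured request
    return json.dumps(body)


# Stand-in for the captured body; the real one carries many more fields.
captured = '{"BusinessLocation": "SA", "PageNumber": 3, "PageSize": 17}'

updated = with_paging(captured, page=1, page_size=500)
print(updated)
```

Everything else in the body is passed through untouched, so the filters from the original search stay exactly as the browser sent them.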

Pop open a terminal, wrap the command in `$( )`, and ask it to print the content. Like:

$(command).Content

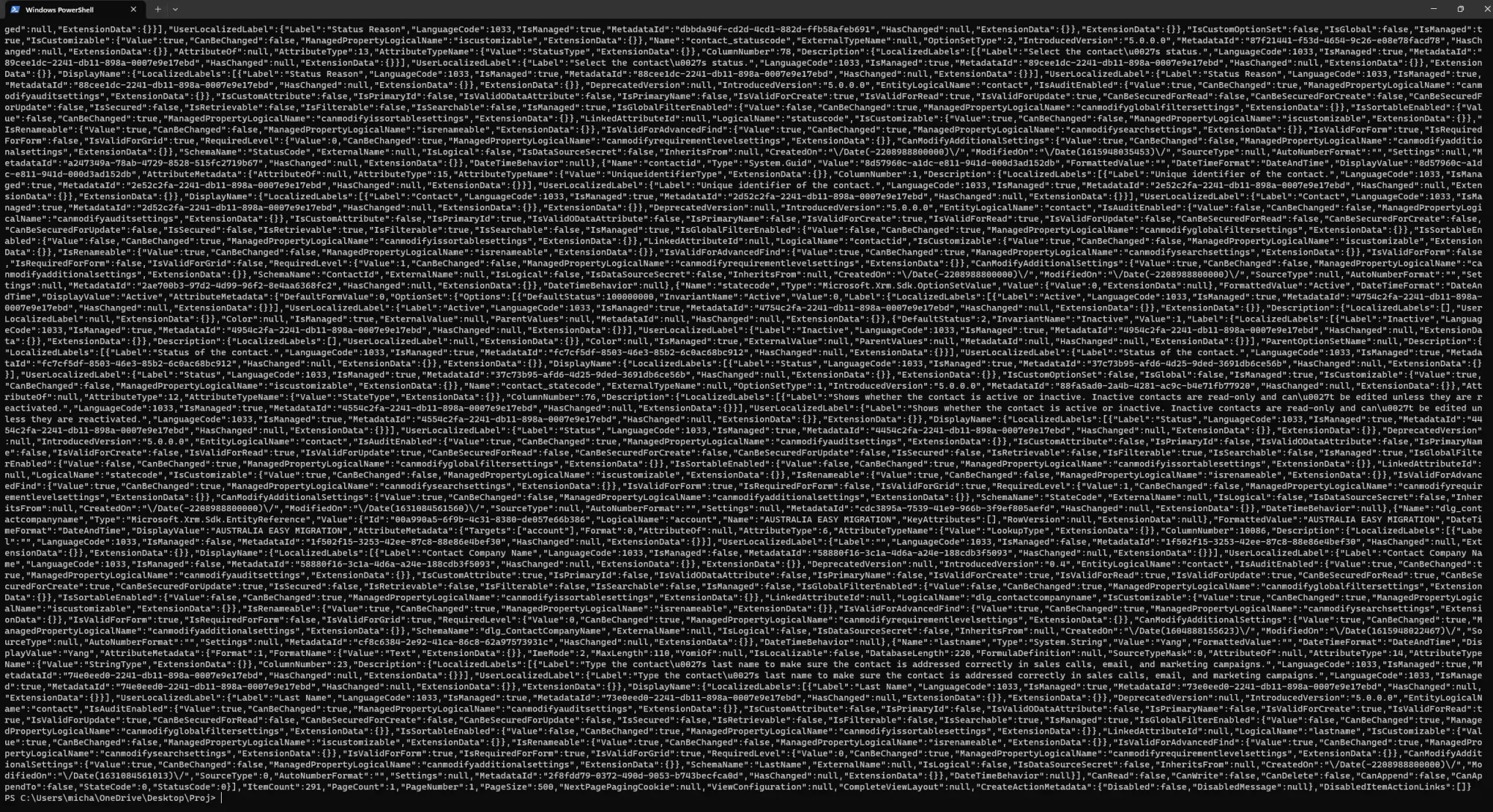

Rad, now we’re getting a big response, in JSON form.

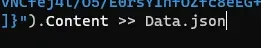

Let’s pipe that into a JSON file.
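If you'd rather do that step from a script, a minimal Python sketch could look like the one below. It takes the response text, checks that it really parses as JSON, and writes a pretty-printed copy to disk. The function name `save_response` and the file name in the usage note are placeholders of mine, not anything from the site.

```python
import json
from pathlib import Path


def save_response(content: str, path: str):
    """Validate the response text as JSON and write it to a file."""
    data = json.loads(content)  # raises ValueError if the text is not JSON
    Path(path).write_text(json.dumps(data, indent=2), encoding="utf-8")
    return data
```

Calling `save_response(content, "agents.json")` with the printed content would leave a readable file to poke through, and the parse step fails loudly if the server sent back an error page instead of data.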

We have first name, last name, address, business name, phone numbers, email addresses, and way more.

I won’t go into much more, but I know that with jq and some scripting, I could deliver this as a CSV or, even better, set up an Excel/Sheets file that takes this raw data and fills other sheets with proper formatting. I could also change the filtering so that we grab not only SA, but every single agency on record.
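For the CSV route, here is a rough sketch in Python rather than jq. The JSON key names (`GivenName`, `FamilyName`, `BusinessName`, `Email`) are guesses for illustration and would need to be swapped for whatever the real response uses; the column headers are the four the contract asks for. The sample record is made up.

```python
import csv
import io

# Hypothetical JSON keys mapped to the column headers from the contract
COLUMNS = {
    "GivenName": "First Name",
    "FamilyName": "Surname",
    "BusinessName": "Company Name",
    "Email": "Email Address",
}


def records_to_csv(records) -> str:
    """Turn a list of agent dicts into CSV text with only the wanted columns."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(list(COLUMNS.values()))
    for record in records:
        # Missing keys become empty cells instead of raising
        writer.writerow([record.get(key, "") for key in COLUMNS])
    return out.getvalue()


# Made-up record, standing in for one entry of the real response
sample = [{
    "GivenName": "Jane",
    "FamilyName": "Doe",
    "BusinessName": "Example Migration",
    "Email": "jane@example.com",
    "Phone": "000",
}]

print(records_to_csv(sample))
```

Excel opens CSV files directly, so this would already get close to the requested deliverable; extra fields like the phone number are simply dropped.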

I didn’t end up getting hired for this job since, as is typical of Upwork and gig sites, even a $20 gig can be met with $2 offers from people in 3rd world countries. That’s a problem for another time.